Machine learning and artificial intelligence have experienced unprecedented growth in recent years, fueled by advancements in computing power, data availability, and algorithmic development. This progress has unlocked an array of possibilities, impacted countless industries, and transformed how we live and work.

Defining Artificial Intelligence and Machine Learning

Let’s start by clarifying some basic terms: Artificial Intelligence (AI) and Machine Learning (ML). I could write an entire article on how the two intertwine, but for brevity’s sake, let’s stick to basic definitions:

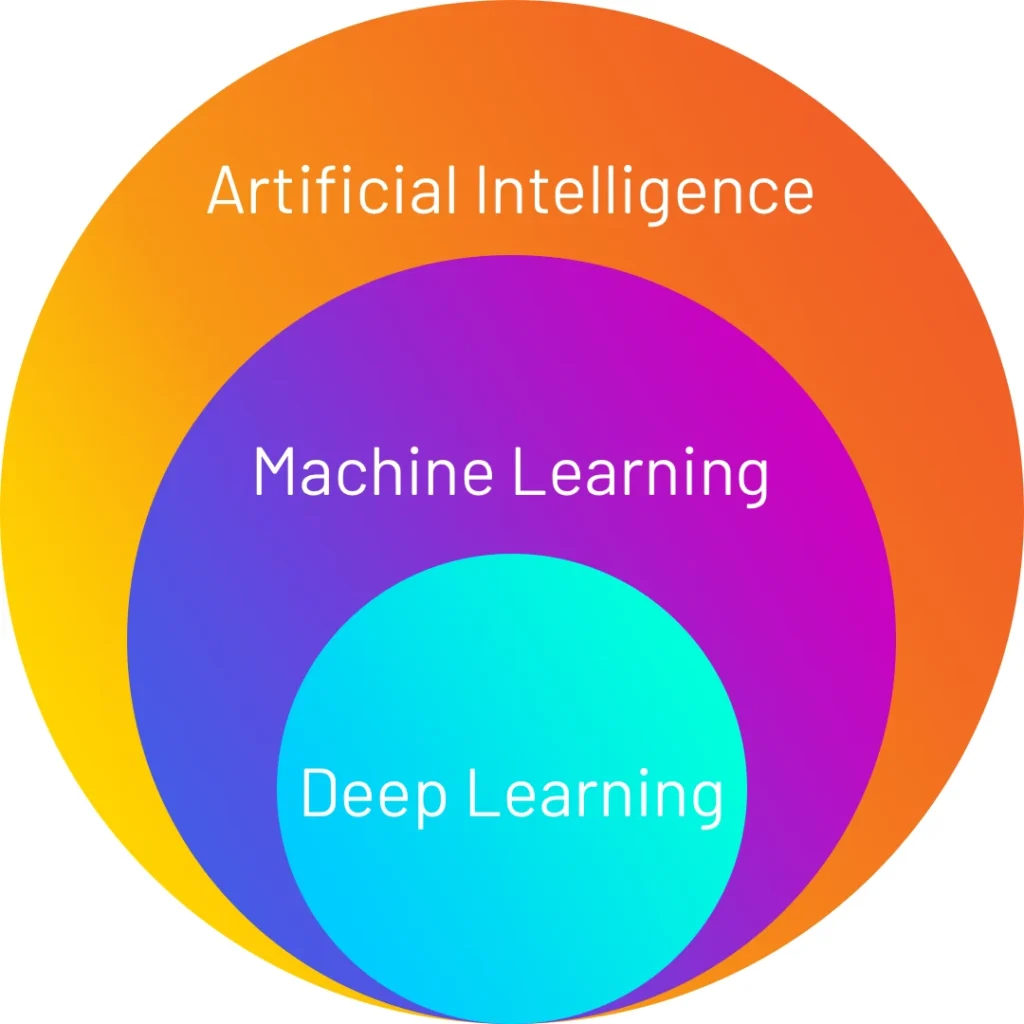

Artificial Intelligence (AI) is, in simple terms, the science of making machines that can think like humans. It is an overarching term that applies to voice assistants (like Alexa and Siri), chatbots (like ChatGPT and Bard), image generators (like Midjourney), and many other technologies.

Machine learning (ML) is a branch, or subset, of AI. Specifically, it is the development and use of systems that learn and adapt without explicit instructions, relying on algorithms and statistical models that find patterns in data.

The Challenges of Machine Learning

Machine learning (ML) has long promised software applications and functionality that would be extraordinarily useful. However, developing and training the models used in ML has historically been a daunting undertaking.

Developing ML models takes a special skill set beyond that of the typical software developer. ML engineers need to understand not only software development but also statistical analysis and mathematical data manipulation, and they need strong skills in data science, problem-solving, and communication.

Training ML models to achieve accurate results is time- and resource-intensive. The computing power required to train some ML models is within reach today only because of the nearly unlimited computing resources available in the cloud. Training an ML model can also require an enormous dataset.

Our Experience with Machine Learning

At Saritasa, we have worked on ML projects that required 80 million images to train the model. These images had to be gathered from multiple sources, such as Flickr and Wikipedia. In many cases, assembling a dataset large enough to train the model is exceedingly difficult. Because it is sometimes not possible to find enough real images of an object to train a model properly, we worked with a client to assess the feasibility of building 3D models of an object and using them to generate images of that object from all angles. These generated images would then help train an ML model.
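The 3D-model idea above comes down to rendering an object from many evenly spread viewpoints. The sketch below is illustrative only and is not code from that project: it computes camera positions around an object at the origin using the Fibonacci-sphere (golden-angle) method, which is our choice for this example. The function name `sphere_viewpoints` is hypothetical, and the actual rendering step is left to whatever 3D tool a team uses.

```python
import math

# Illustrative sketch with a hypothetical helper; not code from the client project.


def sphere_viewpoints(n: int, radius: float = 1.0) -> list[tuple[float, float, float]]:
    """Return n camera positions spread roughly evenly over a sphere of the
    given radius around an object at the origin (Fibonacci-sphere method)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))  # about 2.39996 radians
    points = []
    for i in range(n):
        # Heights step evenly from just below the top to just above the bottom.
        z = 1.0 - (2.0 * i + 1.0) / n
        r = math.sqrt(1.0 - z * z)  # radius of the horizontal circle at height z
        theta = golden_angle * i    # rotate by the golden angle at each step
        points.append((radius * r * math.cos(theta),
                       radius * r * math.sin(theta),
                       radius * z))
    return points


if __name__ == "__main__":
    for x, y, z in sphere_viewpoints(8, radius=2.5):
        # Each position would be passed to a renderer (not shown) aimed at the origin.
        print(f"camera at ({x:+.2f}, {y:+.2f}, {z:+.2f}), looking at the origin")
```

The golden-angle spiral avoids clustering views at the poles, which a simple latitude/longitude grid would do, so each generated image adds a genuinely different angle to the training set.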

Developing and then tweaking the model during training was very resource-intensive as well. Developers spent thousands of hours getting it right. Training the model, and then continuing to refine it as users add to the dataset, consumes an enormous amount of compute time in the cloud.

The Introduction of Pre-Trained Models

One of the most significant contributors to this surge in ML capabilities is the rise of pre-trained models. These models come already trained on massive datasets of text, images, code, and other forms of data, and they have become the backbone of many modern ML applications. With a pre-trained model, most of the hard work and computing resources needed to develop, train, and tune an ML model can be bypassed; developers typically only need to use the model as-is or fine-tune it for their specific task.
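To make this concrete, here is a deliberately tiny sketch of one common way a pre-trained model is reused: keep the pre-trained layers frozen and train only a small new output layer (the "head") on your own data. Everything here is an assumption for illustration: `FROZEN_WEIGHTS` stands in for real pre-trained weights, and the toy dataset and function names are hypothetical. A real project would use a framework such as PyTorch or TensorFlow together with a published model.

```python
import math

# Hypothetical stand-in for a pre-trained backbone. In a real project these
# weights would come from a published model (e.g. a ResNet); here they are
# fixed numbers that the training loop below never changes.
FROZEN_WEIGHTS = [[0.9, -0.2], [0.1, 0.8], [-0.5, 0.5]]

# Hypothetical toy dataset: 2-D inputs with binary labels.
TRAIN_DATA = [
    ((1.0, 0.0), 1), ((2.0, 0.5), 1), ((1.5, -1.0), 1),
    ((0.0, 1.0), 0), ((0.5, 2.0), 0), ((-1.0, 1.5), 0),
]


def frozen_features(x):
    """Map an input to 3 features using the frozen weights and a ReLU."""
    return [max(0.0, w[0] * x[0] + w[1] * x[1]) for w in FROZEN_WEIGHTS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, epochs=200, lr=0.5):
    """Train only a logistic-regression head on top of the frozen features."""
    head, bias = [0.0] * len(FROZEN_WEIGHTS), 0.0
    for _ in range(epochs):
        for x, y in data:
            f = frozen_features(x)  # backbone output; its weights are never updated
            p = sigmoid(sum(h * fi for h, fi in zip(head, f)) + bias)
            err = p - y  # gradient of the log-loss with respect to the logit
            head = [h - lr * err * fi for h, fi in zip(head, f)]
            bias -= lr * err
    return head, bias


def predict(head, bias, x):
    """Return 1 if the head's logit is non-negative (probability >= 0.5), else 0."""
    f = frozen_features(x)
    return 1 if sum(h * fi for h, fi in zip(head, f)) + bias >= 0 else 0


if __name__ == "__main__":
    head, bias = train_head(TRAIN_DATA)
    print("trained head:", [round(h, 3) for h in head], "bias:", round(bias, 3))
    print("prediction for (3, 0):", predict(head, bias, (3.0, 0.0)))
```

Only four numbers (three head weights and a bias) are learned here; the "pre-trained" part is reused untouched. That is the same reason fine-tuning a real pre-trained model is so much cheaper than training one from scratch.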

Benefits of Pre-Trained Models:

- Reduced Development Time: Pre-trained models eliminate the need to train complex models from scratch, significantly reducing development time and resources. This allows developers to focus on building applications rather than spending months or even years training a model.

- Improved Performance: Pre-trained models often achieve state-of-the-art performance on various tasks, including image classification, natural language processing, and speech recognition. This allows developers to leverage the power of advanced algorithms without possessing deep expertise in machine learning.

- Resource Efficiency: Training large ML models requires significant computational resources, which puts it out of reach for many individuals and organizations. Pre-trained models offer a more efficient approach, requiring less hardware and energy to fine-tune and deploy.

- Accessibility for Everyone: Pre-trained models democratize AI by making it accessible to those with less technical expertise. Open-source pre-trained models are widely available, allowing anyone to build intelligent applications without extensive ML knowledge.

- Reduced Cost: All of the above savings add up to lower application development costs.
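The resource savings are easy to see with some back-of-the-envelope arithmetic. This is a minimal sketch under stated assumptions: it takes a ResNet-50 backbone, uses torchvision's reported total of 25,557,032 parameters, and assumes the model's final 2048-to-1000 classification layer (one output per ImageNet class) is swapped for a new layer sized to your own classes, with everything else frozen. The helper names are hypothetical.

```python
# Back-of-the-envelope: how many parameters does fine-tuning only a new
# classification head actually train? (Hypothetical helpers; assumed figures.)


def linear_params(in_features: int, out_features: int) -> int:
    """Number of weights plus biases in a fully connected layer."""
    return in_features * out_features + out_features


# Assumption: torchvision's reported parameter count for ResNet-50.
RESNET50_TOTAL = 25_557_032
# ResNet-50 ends with a 2048 -> 1000 layer for the 1,000 ImageNet classes.
ORIGINAL_HEAD = linear_params(2048, 1000)
# Everything except that final layer stays frozen during fine-tuning.
BACKBONE = RESNET50_TOTAL - ORIGINAL_HEAD


def trainable_fraction(num_classes: int) -> float:
    """Share of the adapted model's parameters that are actually trained."""
    new_head = linear_params(2048, num_classes)
    return new_head / (BACKBONE + new_head)


if __name__ == "__main__":
    for k in (2, 5, 100):
        print(f"{k:>3} classes: {linear_params(2048, k):,} trainable parameters "
              f"({trainable_fraction(k):.3%} of the model)")
```

With five classes, this works out to 10,245 trained parameters, well under 0.1% of the model. In practice, teams often unfreeze some of the later layers as well, so real fine-tuning runs train more than this; the point is the order of magnitude, not an exact budget.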

Examples of Pre-Trained Models:

- BERT: A pre-trained language model developed by Google AI that excels in various natural language processing tasks, such as sentiment analysis and text summarization.

- GPT-4: A powerful language model developed by OpenAI that can generate realistic and creative text in many forms, including poems, code, scripts, and musical pieces.

- ResNet: A family of image classification models, widely available in pre-trained form, known for its accuracy and efficiency and used in many computer vision applications.

- YOLO: A pre-trained object detection model capable of identifying and localizing multiple objects in an image in real time, making it ideal for tasks like autonomous driving and video surveillance.

Impact on Different Industries:

- Healthcare: Pre-trained models are used to detect diseases in medical images, analyze medical data, and personalize treatment plans.

- Finance: Fraud detection, risk assessment, and personalized financial recommendations all benefit from the power of pre-trained models.

- Retail: Personalized product recommendations, targeted marketing campaigns, and improved customer service are just a few ways pre-trained models are transforming the retail industry.

- Manufacturing: Predictive maintenance, quality control, and process optimization are all areas where pre-trained models are driving efficiency and innovation.

The Future of Pre-Trained Models

As research and development in pre-trained models continue, we can expect even greater advancements in their capabilities and accessibility. This will further democratize AI and make its potential available to a wider range of individuals and organizations, accelerating innovation and progress across all sectors.

In conclusion, pre-trained models have played a pivotal role in propelling machine learning into the mainstream. Their ability to significantly reduce development time, improve performance, and increase resource efficiency has made AI more accessible than ever before. As these models continue to evolve, we can expect to see even more transformative applications emerge, shaping the future across all aspects of human life.